Comparing Aikido and Snyk for SCA Scanning

A small step towards total market coverage

For as often as it’s said that SCA scanning is commoditized, there are major differences between scanners and their capabilities. These differences aren’t always clear, as it’s easy to get stuck in the mire of function reachability, language support, transitive dependencies, and so on.

In this article, we’ll compare Snyk’s and Aikido’s features, primarily covering detections, reachability, and workflows. In the long run, I hope to extend this testing to other providers, so if you have any feedback, please let me know. If you’d like to add any further examples, please feel free to open a pull request against the repo.

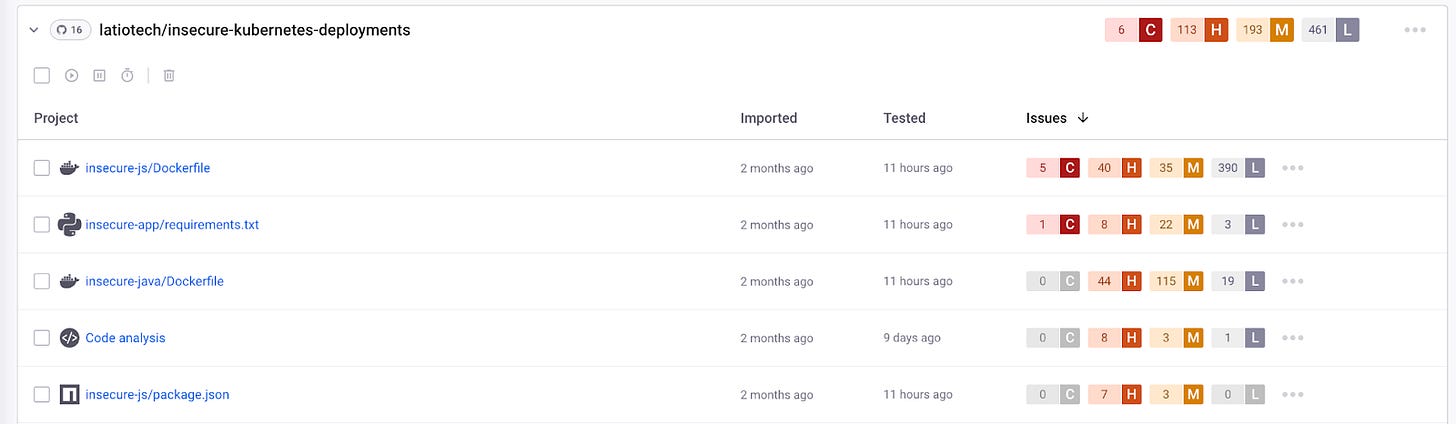

For testing SCA in my insecure-kubernetes-deployments repo, I have a few different categories of vulnerabilities. In my JavaScript files, I import three vulnerable libraries: semver, lodash, and json5 (the last as a transitive dependency of babel/core, without declaring it in the package.json). In my Python files, I import requests, cryptography, and flask. I use cryptography and requests in a script that doesn’t actually get deployed anywhere (in fact it’s a ransomware script, to test whether scanners can tell contextually what’s happening), but I do use flask in my web app. If I did deploy that script, I would be exposed to 3 vulnerabilities in requests and 2 vulnerabilities in cryptography.
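To make the direct vs. transitive distinction concrete, here is a minimal sketch of how a tool might classify installed npm packages. It assumes the npm lockfile v2/v3 layout (a "packages" map keyed by "node_modules/..." paths); the function name and the sample manifest and lockfile are hypothetical stand-ins, not the actual files from the repo or either vendor's method.

```python
def classify_dependencies(manifest: dict, lockfile: dict) -> dict:
    """Label each installed npm package as 'direct' or 'transitive'.

    Assumes the npm lockfile v2/v3 layout, where installed packages are
    listed under "packages" with keys like "node_modules/<name>".
    """
    declared = set(manifest.get("dependencies", {})) | set(
        manifest.get("devDependencies", {})
    )
    labels = {}
    for path in lockfile.get("packages", {}):
        if not path:  # the "" key is the root project itself
            continue
        # Nested installs look like "node_modules/a/node_modules/b"; keep the last name.
        name = path.split("node_modules/")[-1]
        if name in declared and path == f"node_modules/{name}":
            labels[name] = "direct"
        else:
            labels.setdefault(name, "transitive")
    return labels


# Hypothetical sample data: version ranges are placeholders.
manifest = {"dependencies": {"semver": "*", "lodash": "*", "@babel/core": "*"}}
lockfile = {
    "packages": {
        "": {},
        "node_modules/semver": {},
        "node_modules/lodash": {},
        "node_modules/@babel/core": {},
        "node_modules/json5": {},  # installed only because something else needs it
    }
}

labels = classify_dependencies(manifest, lockfile)
print(labels)
```

In this sample, json5 comes back as transitive because it appears in the lockfile but not in the manifest, which is the situation described above.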

To sum up the vulnerabilities: between Python and JavaScript, there are 6 imported dependencies, 3 actually exploitable vulnerabilities, and about 400 raw CVEs. 2 of those exploitable vulnerabilities come from direct dependencies, and 1 comes from a transitive dependency. From a runtime vs. static reachability perspective, a static scanner has no way of knowing that the ransomware script never actually runs, so if you don’t expect your scanner to have that runtime context, there are 5 additional exploitable vulnerabilities.

The goal of this assessment is to discover these three vulnerabilities with as few false positives as possible. To keep things simple, the repo was scanned only by importing the code from GitHub.

Testing Results

For understanding these results, the goal is a low overall vulnerability count (low false positives), without missing any vulnerabilities as a result (low false negatives).
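As a rough illustration of how that goal can be scored, here is a small sketch that compares a scanner's reported findings against a hand-verified list of exploitable vulnerabilities. The finding labels and the `score_scanner` helper are hypothetical; they are not real output from either tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Score:
    true_positives: int
    false_positives: int
    false_negatives: int

    @property
    def precision(self) -> float:
        """Share of reported findings that were actually exploitable."""
        reported = self.true_positives + self.false_positives
        return self.true_positives / reported if reported else 0.0


def score_scanner(reported: set, exploitable: set) -> Score:
    """Compare a scanner's reported findings against hand-verified ground truth."""
    return Score(
        true_positives=len(reported & exploitable),
        false_positives=len(reported - exploitable),
        false_negatives=len(exploitable - reported),
    )


# Hypothetical finding labels, not real scanner output.
ground_truth = {"semver-1", "lodash-1", "json5-1"}
scanner_output = {"semver-1", "lodash-1", "json5-1", "lodash-2", "requests-1"}

result = score_scanner(scanner_output, ground_truth)
print(result.false_negatives, result.false_positives, round(result.precision, 2))
```

A scanner does well here when `false_negatives` stays at zero while `precision` climbs toward 1.0.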

*That really large light blue bar is Python Cryptography. It turns out cryptography vulnerabilities are complicated (see appendix, and data breakdown). Both tools ultimately suggested the same upgrade to fix everything, but the appendix has a fascinating breakdown of different scanner results due to the OpenSSL wheel used when building different versions of Cryptography.

Detection Results

From the above results table, Aikido appears to be doing slightly more advanced reachability analysis, especially compared to Snyk’s non-enterprise tier, which does not include reachability. Additionally, reachability support varies widely by language, so these results may differ for other languages; this test was only JavaScript.

Here are some very important caveats!

The Cryptography package contributed to the large skew in percentages, and those findings are incredibly debatable (see appendix). That’s why the graph adjusts for this package.

My sample size is really, really small, but it speaks to the complexity of scanner comparisons. Digging into vulnerabilities and determining reachability is no small task, which is why I typically emphasize patching over determining exploitability. Believe me, I could’ve patched these packages in much less time than it took to figure out any one of these vulnerabilities.

There were more irrelevant Lodash findings from Snyk, but those findings were from their original research. That’s why the lower percentage for true positive rate could be viewed as a pro or a con: Snyk has a surprising amount of original vulnerability research, which is why their write ups are typically well referenced in practice.

Aikido reduced their findings to 4 actual patches that need to be applied, whereas Snyk lists all of the findings as unique, making it harder to see what changes actually need to happen. However, on the fixes tab, Snyk shows a total of 6 fixes, with the additional 2 asking to pin transitive dependencies in Python which also have vulnerabilities. I don’t want to overblow the differences here, but Aikido’s interface was slightly more intuitive for a developer just looking at which packages need an upgrade. To be clear, either tool gave the necessary data.
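The idea of rolling individual findings up into one upgrade per package can be sketched as follows. This is only an illustration under simple assumptions (plain dotted version numbers, one fix version per finding); the package names, finding IDs, and `group_fixes` helper are hypothetical and do not reflect how either vendor implements grouping.

```python
from collections import defaultdict


def parse_version(version: str) -> tuple:
    """Parse a plain dotted version like '1.2.3' (no pre-release tags)."""
    return tuple(int(part) for part in version.split("."))


def group_fixes(findings: list) -> dict:
    """Collapse per-vulnerability findings into one upgrade per package."""
    grouped = defaultdict(lambda: {"findings": [], "upgrade_to": None})
    for finding in findings:
        entry = grouped[finding["package"]]
        entry["findings"].append(finding["id"])
        fixed = finding["fixed_in"]
        # The upgrade must satisfy every finding, so keep the highest fix version.
        if entry["upgrade_to"] is None or parse_version(fixed) > parse_version(
            entry["upgrade_to"]
        ):
            entry["upgrade_to"] = fixed
    return dict(grouped)


# Hypothetical findings; names, IDs, and versions are placeholders.
findings = [
    {"id": "F-1", "package": "lib-a", "fixed_in": "1.2.0"},
    {"id": "F-2", "package": "lib-a", "fixed_in": "1.10.1"},
    {"id": "F-3", "package": "lib-b", "fixed_in": "2.0.0"},
]

tickets = group_fixes(findings)
for package, ticket in tickets.items():
    print(package, ticket["upgrade_to"], ticket["findings"])
```

Three findings become two action items, each with a single target version, which is closer to what a developer actually has to do.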

Snyk has some findings that aren’t related to CVEs, but are related to their own research initiatives. One fantastic researcher you should follow is Liran Tal, who helped review this report, has written excellent books about secure Node.js coding, and has forgotten more about JS than I’ll ever know. The two additional Lodash findings from Snyk are not tied to a CVE, but have small write ups on Snyk’s page indicating they were taken from some published research. In this case, these vulnerabilities were not related to my code, so they contributed to the false positive rate; however, most vendors do not use these proprietary findings in their scans.

Aikido does some of their own proprietary research as well, but it didn’t come up in this testing. Their research is based on very cool LLM-driven analysis of open source, which has produced some unique findings. All of this really points to the overall challenge of a unified vulnerability database, but then we’re getting off topic.

When it comes to detection, Snyk and Aikido’s capabilities are rather similar. From an outcomes perspective, they both suggested the same patches, which is what really matters. It is worth noting that Snyk’s reachability analysis requires enterprise licensing, and that Aikido’s reachability filtered out ever so slightly more vulnerabilities. With prioritization, both tools have their own methodology for increasing or reducing the risk of specific vulnerabilities. Snyk had some cool insights around contextual vulnerabilities and assigns custom risk scores, while Aikido was a little more aggressive in changing severity based on proofs of concept or vulnerability type.

The Snyk Enterprise UI makes it clearer that the risk score is being adjusted based on reachability.

Summary: Very slight edge to Aikido based on pure reachability percentages, but Snyk has more original vulnerability research.

Workflows

Now that we’ve tested the detection capabilities, let’s take a look at workflow options for getting things fixed. For Snyk, I get a list of files with vulnerabilities attached. The findings are prioritized mostly on CVSS score, but for some of the vulnerabilities Snyk has their own writeup with scoring. Snyk chooses a risk score per vulnerability as their prioritization methodology. It’s a minor difference, but from a developer perspective Aikido rolls up the idea of these “risk scores” and assigns an overall severity to upgrading the library. In Snyk, I have the necessary fix and exploit data, as well as guidance on what versions I can migrate to with “Fix advice.”

I like the way that Aikido groups the findings more, but the results were basically identical between Snyk’s Fix Advice tab and what Aikido was showing. Similarly, in Aikido I can see the necessary fix versions and options for patching, and I can open tickets or Jira issues for remediation. Snyk, however, had slightly more detailed write ups for vulnerabilities where applicable, but the overall descriptions were generally comparable.

From a workflow perspective, Snyk appears to offer opening a Jira request per finding rather than per fix. This can be an annoying workflow for developers, because their action item is to patch a dependency, not to resolve single vulnerabilities one at a time. They also offer pull requests which bump the versions, but in my experience these tend to really clog up developer PR boards, as they require testing before merging. Snyk does, however, allow grouping all of the changes into a single PR, which gives developers a starting point for patching while minimally clogging up the PR tab. I only bring all this up for completeness’ sake though, as I don’t find auto-PRs for patching to be much help unless they’re more holistic.

Aikido combines the findings into a single ticket to upgrade a dependency, which is a much more understandable developer workflow. They also offer automatic pull requests like Snyk. The Slack integrations are similar, but Aikido also offers a weekly report via the integration.

Summary: Aikido for better tickets, but Snyk had more vulnerability details at times.

Overall, the capabilities between the platforms are very similar, with Aikido providing some nicer grouping and automatic prioritization, but Snyk having a slight benefit with some of their original vulnerability research. Ultimately, I found Aikido’s grouping much more logical from a developer perspective, letting me know exactly what needs to be remediated. Most of the choice between the platforms should come down to overall platform needs rather than SCA functionality specifically.

I want to especially thank Liran Tal from Snyk and Willem Delbare from Aikido for their careful reviews of this comparison and feedback along the way.

Appendix: Cryptography Breakdown

Okay, what’s up, mega nerd. Now that the CISOs have stopped reading, we’re going to really break down the absolute insanity of trying to do comparative tool CVE analysis. The Python Cryptography package bundles pieces of OpenSSL within itself for specific functionality. Which version of OpenSSL is used seems to depend on versioning differences based on which package someone is downloading. Here is where the OpenSSL versions are declared. I’m pretty sure these versions become the different distributions here.

I can only assume that both Snyk and Aikido pick these up because of how their scanners work on the backend, correlating the CVEs they spotted to the packages they scanned. Both vendors seemed surprised that they flagged these at the package level, rather than at the OS level.

My personal take is that all of these should be false positives, because you can even set an env flag to tell cryptography which OpenSSL version to use. To my mind, this is another point in favor of the strategic value of runtime reachability analysis, as well as the overlap between SCA and container scanning, as there’s no way to know pre-build which version is specified.
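One way to see why this is a runtime question: the OpenSSL build that cryptography is actually linked against can only be read from the installed environment. The sketch below is a hedged illustration that reports it when the package is present. It relies on `backend.openssl_version_text()` from cryptography's OpenSSL backend, falls back to `None` if the package is missing or exposes the version differently, and also reports the version used by Python's own `ssl` module for comparison. The helper name is hypothetical.

```python
import ssl
from typing import Dict, Optional


def linked_openssl_versions() -> Dict[str, Optional[str]]:
    """Report which OpenSSL builds are actually loaded in this environment."""
    versions: Dict[str, Optional[str]] = {
        "python_ssl_module": ssl.OPENSSL_VERSION,
        "cryptography": None,
    }
    try:
        from cryptography.hazmat.backends.openssl import backend

        versions["cryptography"] = backend.openssl_version_text()
    except (ImportError, AttributeError):
        # cryptography is not installed, or this release exposes the version differently.
        pass
    return versions


versions = linked_openssl_versions()
for source, version in versions.items():
    print(f"{source}: {version}")
```

Running this inside the built container, rather than against the source repo, is what would confirm whether a given OpenSSL CVE even applies.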

Full Comparison Spreadsheet is here for the nerdy deets.
