Emerging Categories: The Evolution of AI SOC

Part one of a series reviewing emerging tool categories going into 2026

This post was completed in collaboration with the team at Mate Security, who let me use their product to demonstrate its AI SOC capabilities and asked me to speak honestly about the platform.

As an emerging category, AI SOC has earned mixed reviews and seen massive changes over the last year. On the one hand, like most AI tooling, when it works it feels magical, delivering unique insights and automation. On the other hand, too often the tools create instant friction as they lead security analysts down wrong paths while driving up costs with inefficient queries.

This article covers the challenges that arrived with the first wave of AI SOC tools, and how a second wave is addressing them by delivering a more AI-native user experience. I argue that this transformed user experience gets us much closer to the outcomes we expect from AI.

As a quick aside, there is a third kind of AI SOC tool that functions more as a complete data platform, such as Exaforce and AI Strike. These won’t be the subject of this article, because far fewer vendors are taking this approach and its use case is more robust.

The Challenges with AI SOC Tools

It’s always challenging to be the first mover in a new category. The first generation of AI SOC tools revealed some early challenges in applying LLMs to SOC workflows:

Summaries that add no value beyond the initial alert

Running data enrichments that would be cheaper to do natively in the SIEM

Leaning on verbose summaries rather than actionable guidance

Increasing tool costs through unoptimized queries

Requiring manual building and maintenance of accurate knowledge bases

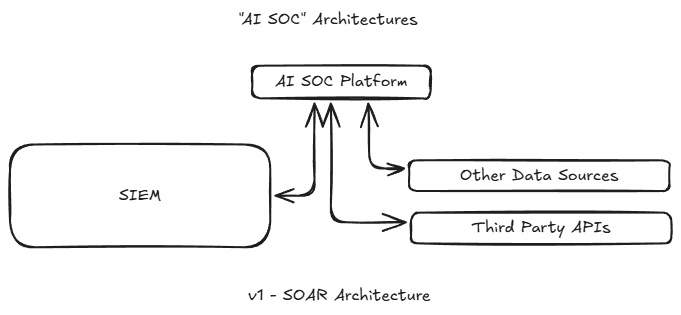

These tools should be commended for their big experimental bets, but those bets often missed because the tools behaved too much like SOAR platforms instead of providing a true copilot experience. In the worst case, the summaries just add another layer of abstraction before you need to click into the alerts anyway. In many of the tools I have used, for example, I find myself going straight to the raw JSON responses rather than trusting the AI summaries.

Many first-generation AI SOC platforms have ended up closely resembling traditional SOAR platforms, used for specific enrichments or automations. Leading SOAR providers like Tines and Torq have been able to quickly incorporate AI into their tooling, further diluting the differentiation.

At their best, these tools can surface unique insights by correlating data that an analyst would otherwise miss; at their worst, they spin in circles investigating meaningless information while driving up costs, like looking up the IP abuse reputation of localhost.
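One cheap guardrail against the localhost example is to filter out addresses that can never appear in a public abuse feed before spending an external lookup. The sketch below assumes enrichment is triggered once per IP indicator; `should_enrich_ip` is a hypothetical helper for illustration, not part of any product discussed here. It relies only on Python's standard `ipaddress` module.

```python
import ipaddress


def should_enrich_ip(value: str) -> bool:
    """Return True only for publicly routable IPs worth an external reputation lookup.

    Hypothetical pre-filter: an agent would call this before querying any
    paid IP-reputation service.
    """
    try:
        addr = ipaddress.ip_address(value.strip())
    except ValueError:
        # Not a literal IP (e.g. the hostname "localhost"); skip this enrichment path.
        return False
    # is_global is False for loopback, RFC 1918 private, link-local, and other
    # reserved ranges, none of which a public abuse database can say anything about.
    return addr.is_global
```

A filter like this costs microseconds and runs before the LLM ever decides to spend a query, which is the kind of cheap, deterministic check that is better done in code than left to the model.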

How New AI SOC Tools are Different

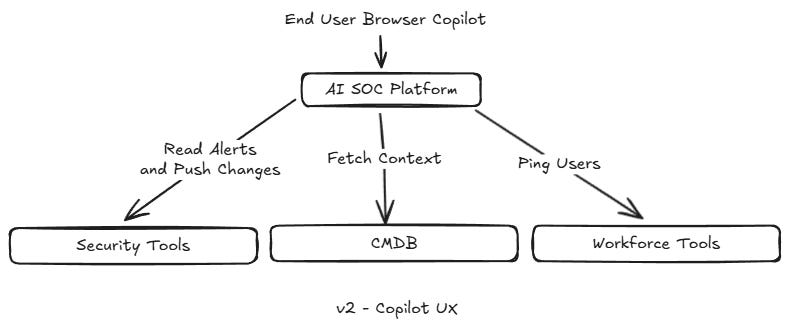

A second generation of AI SOC tools offers a glimpse of future AI SOC workflows, functioning more as contextual copilots than as SOAR + AI. These SOC copilots are the first tools that provide a “Claude Code but for SOC” experience rather than a “here’s some text we generated that could possibly be helpful” one.

These fundamental features are what set these tools apart and set them up for success:

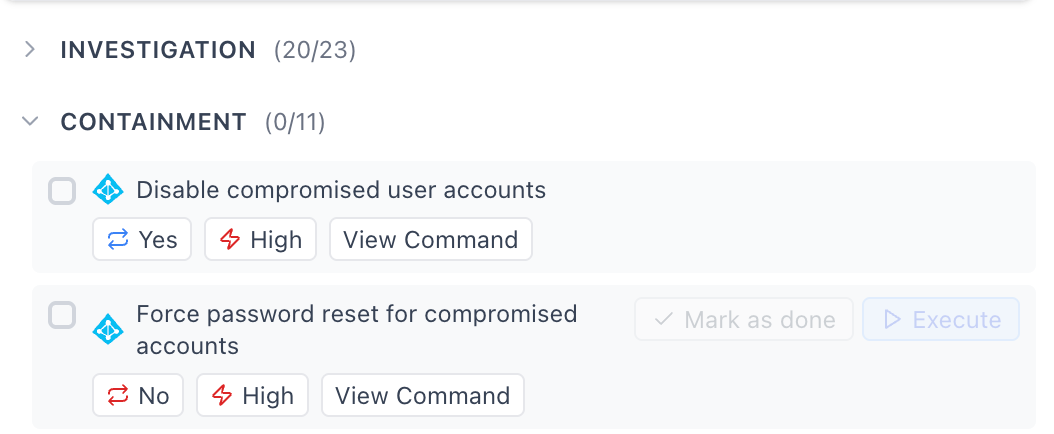

Taking actions on behalf of the security analyst, for investigation and response

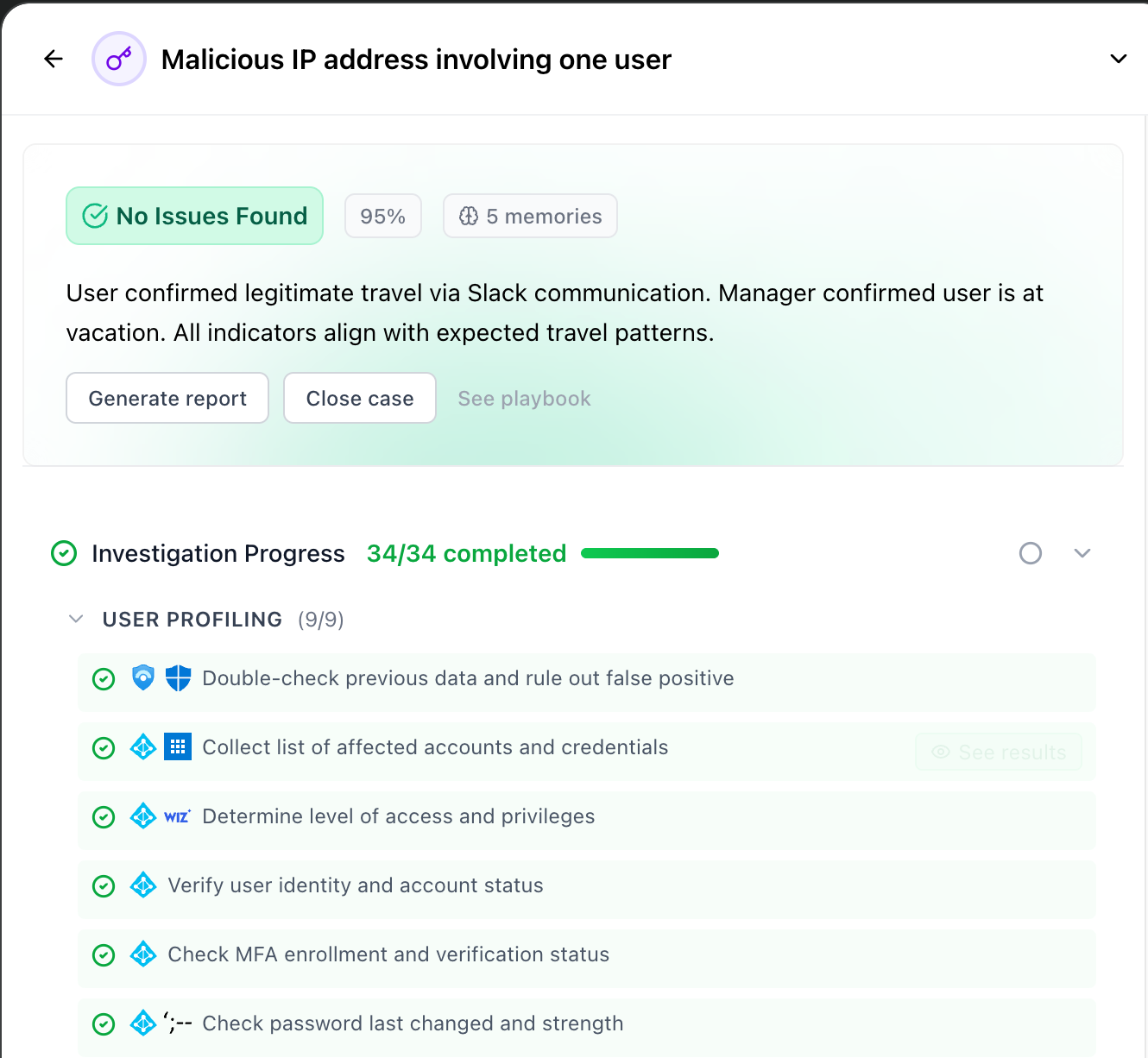

Investigating alongside the analyst rather than trying to provide a complete summary at the end

Continuously learning and applying organization context

Giving the analyst complete control over their workflows as they’re happening

Fine-tuning agents to enable autonomous actions

The leading tools in this new category are Mate and Legion. Most recently I had the chance to go in depth with Mate, so this article focuses on its methodology to demonstrate what differentiates this new generation of tools.

First Differentiator: Continuous Learning

From my experience working at an MDR provider, mapping organizational context is one of the largest challenges in security operations. Constant updates are needed to track who is responsible for an alert, which alerts are common, and what to do in various situations. For example, imagine having one customer who uses different DNS resolvers depending on the environment, and the headache that creates in keeping analysts up to date! In the context of AI SOC, continuous learning tackles these hurdles in two forms: building an organizational knowledge base, and fine-tuning various agents to excel at different tasks.

When it comes to building an organizational knowledge base, the basic approach has been to create a library of text that the agent can consult before deciding on an alert. The problem is that this information enters the context window too late: after the agent has already made several decisions.
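To make the ordering problem concrete, here is a minimal sketch of front-loading organizational context so it is in place before the model makes its first decision. The `Alert` type, the entity-keyed `ORG_CONTEXT` dictionary, and `build_triage_prompt` are all hypothetical stand-ins for whatever store and prompt format a real tool uses; this is not Mate's implementation.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    rule: str
    user: str
    source_ip: str


# Hypothetical organizational knowledge, keyed by the entity it describes.
ORG_CONTEXT = {
    "alice@example.com": "Owns the payments service; travels often between US and EU offices.",
    "10.20.0.53": "Internal DNS resolver used only by the staging environment.",
}


def build_triage_prompt(alert: Alert, context: dict[str, str]) -> str:
    """Assemble a prompt with relevant context placed BEFORE the alert and task."""
    entities = (alert.user, alert.source_ip)
    notes = [f"- {entity}: {context[entity]}" for entity in entities if entity in context]
    sections = [
        "## Organizational context",
        "\n".join(notes) if notes else "- (none found)",
        "## Alert",
        f"rule={alert.rule} user={alert.user} source_ip={alert.source_ip}",
        "## Task",
        "Decide whether this alert is benign or needs escalation, citing the context above.",
    ]
    return "\n".join(sections)


prompt = build_triage_prompt(
    Alert(rule="impossible_travel", user="alice@example.com", source_ip="203.0.113.7"),
    ORG_CONTEXT,
)
```

The design choice is simply ordering: context is looked up by the entities already present in the alert and placed ahead of the task, instead of being retrieved mid-investigation once the agent has committed to a path.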

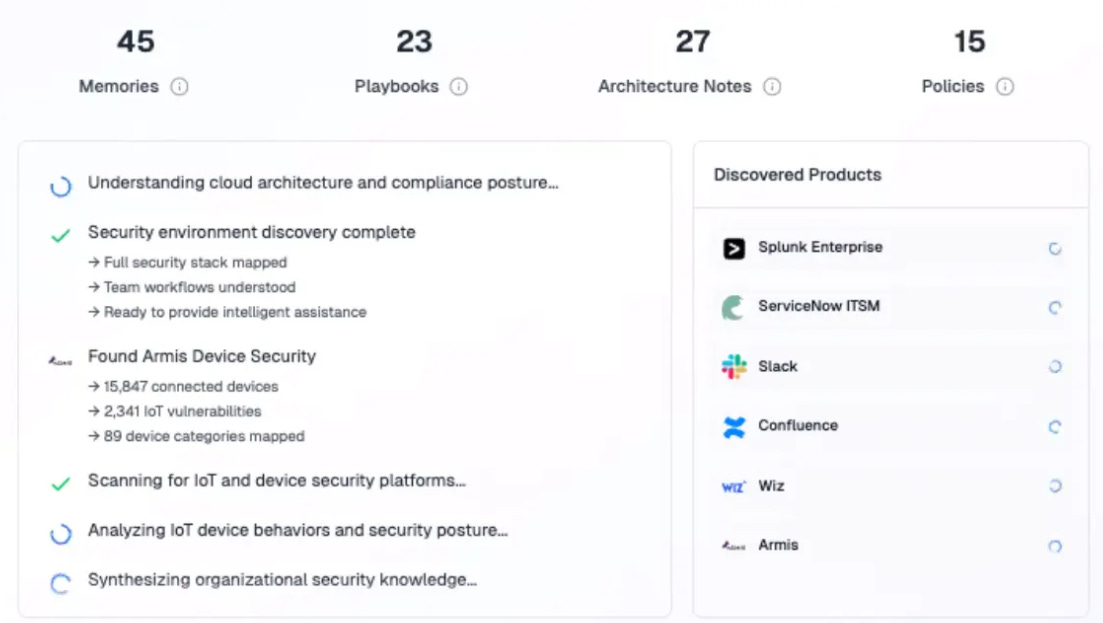

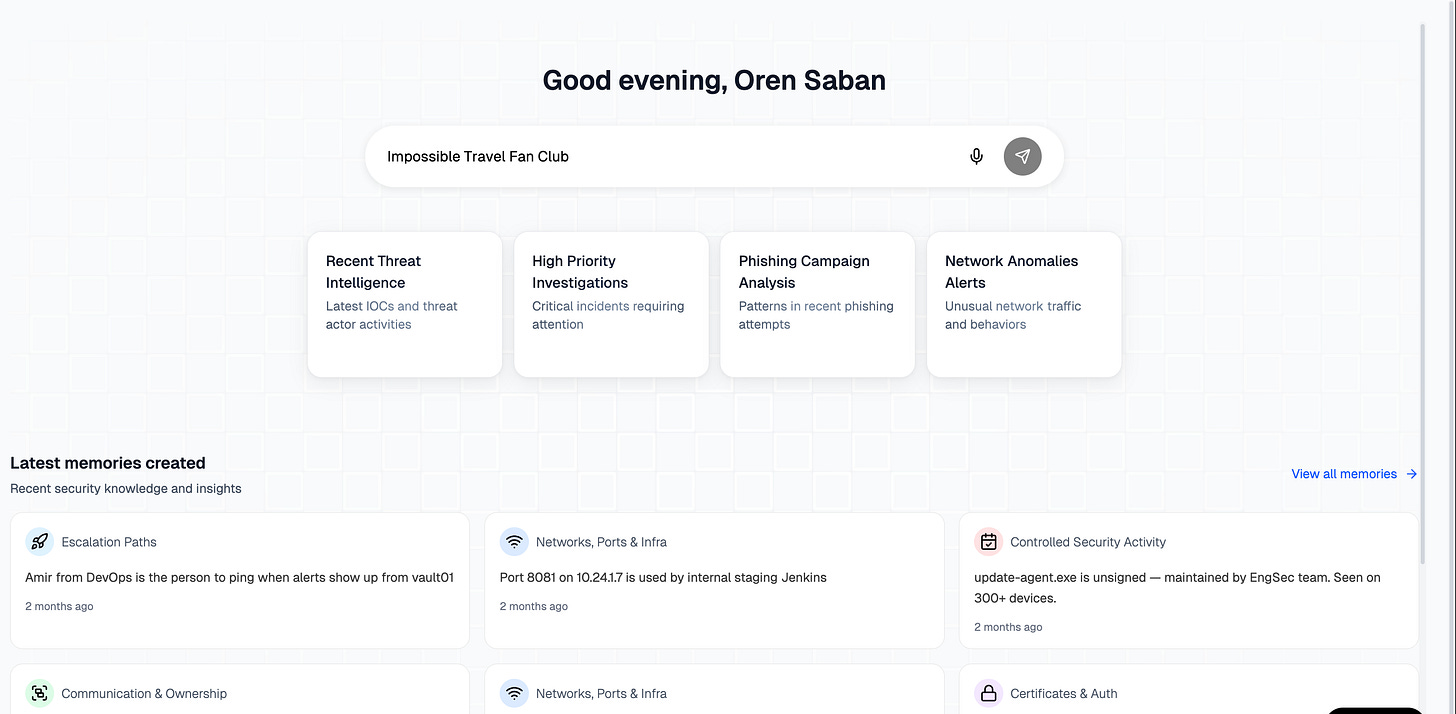

Solutions like Mate instead integrate across your tools to automatically build out the context the agent will need later on, whether that’s asset ownership or details about your environment. What stood out to me about Mate’s approach to integrations is that it looks up data via user accounts rather than relying on a series of bespoke integrations.

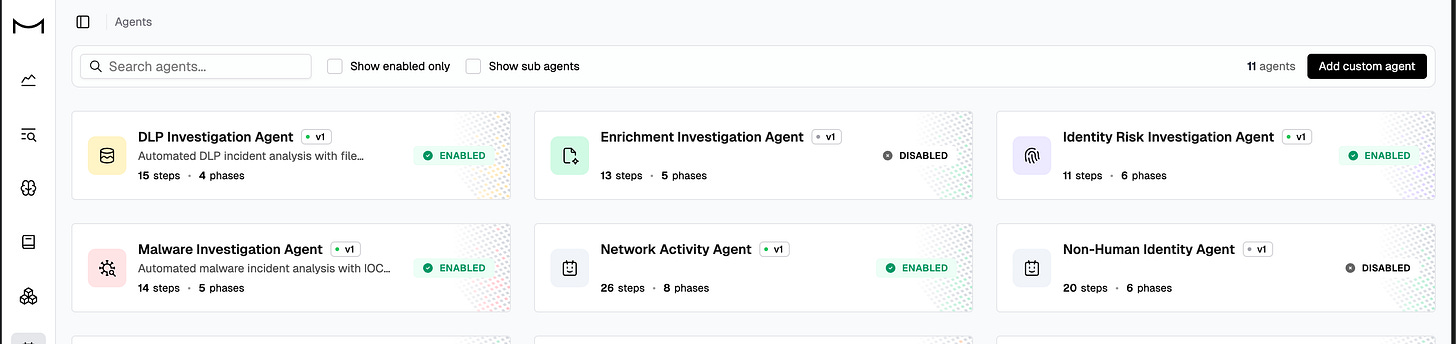

The other aspect of continuously tuning these tools to your environment is being able to configure agents to produce better outcomes. Many AI SOC tools deliver lackluster results because their context windows become bloated with unhelpful information. Mate offers the ability to create and manage custom agents, giving each one relevant tools and knowledge bases. This feels like the next generation of SOAR: tweaking agent behavior rather than API calls.
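As a rough illustration of why scoping matters, the sketch below defines an agent with an explicit tool allowlist and a fixed set of knowledge bases, so only that material can enter its context window. `AgentSpec`, `load_context`, and every tool and knowledge-base name are hypothetical; this shows the general idea of scoped agents, not Mate's configuration format.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSpec:
    """Hypothetical definition of a task-specific agent."""
    name: str
    instructions: str
    tools: set[str] = field(default_factory=set)
    knowledge_bases: set[str] = field(default_factory=set)

    def can_call(self, tool: str) -> bool:
        # Tool calls outside the allowlist are refused rather than attempted.
        return tool in self.tools


def load_context(spec: AgentSpec, all_kbs: dict[str, str]) -> str:
    """Return only the knowledge bases assigned to this agent, in a stable order."""
    return "\n".join(all_kbs[kb] for kb in sorted(spec.knowledge_bases) if kb in all_kbs)


phishing_agent = AgentSpec(
    name="phishing-triage",
    instructions="Triage reported phishing emails; never quarantine without analyst approval.",
    tools={"email_headers", "url_reputation", "mailbox_search"},
    knowledge_bases={"approved-senders", "vip-list"},
)
```

Keeping unrelated knowledge bases (cloud asset inventories, for instance) out of a phishing agent's context is one straightforward way to avoid the bloat described above.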

Mate is one of the first tools I’ve seen in this category that offers the level of customization needed to roll out automations at scale.

Second Differentiator: Copilot User Experience

The second major shortcoming of the first wave of tools was that they lacked a copilot experience and introduced a dashboard-style one instead. Unfortunately, most early AI SOC tools ended up here, offering yet another alert board, or, as I affectionately call many of these tools, a SIEM for your SIEM.

Another differentiator of second-wave tools like Mate is a user experience focused on helping with investigations: not just summarizing what happened, but surfacing genuinely helpful information along the way. I can’t help but compare this to the generations of AI coding tools, which moved from copy-pasting code in and out of ChatGPT to working alongside AI in Claude Code or Cursor to reach the end result.

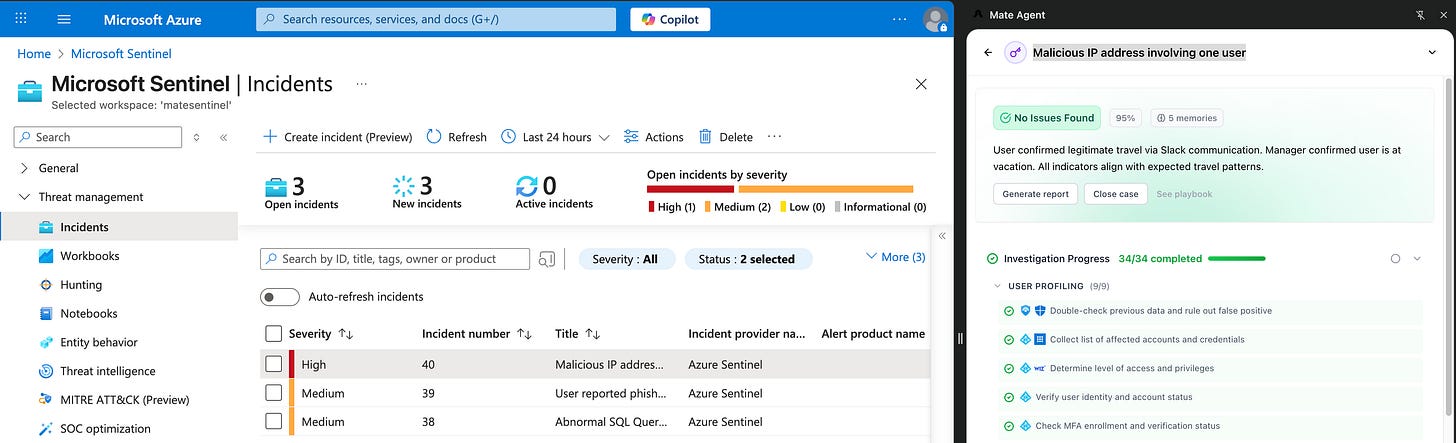

This shift in user experience is what finally opened me up to the category as a whole: not expecting the AI to be perfect, and instead building a UX that is always useful. This shows up first in investigations, and then in fetching additional data to enrich the incident. The most helpful automation is sending a Slack message to confirm common alerts that are anomalous but usually safe, like impossible travel.
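For readers unfamiliar with impossible travel, the underlying check is simple arithmetic: the great-circle distance between two sign-in locations divided by the time between them. The sketch below uses the haversine formula and an assumed 900 km/h threshold (roughly airliner cruising speed); the function names and message wording are hypothetical, and actually posting to Slack is deliberately left out. VPN egress points and inaccurate geo-IP data make this alert fire on legitimate users often, which is why a quick confirmation message is so useful.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(login_a, login_b, max_kmh: float = 900.0) -> bool:
    """Each login is a (datetime, lat, lon) tuple; max_kmh is an assumed threshold."""
    (t1, lat1, lon1), (t2, lat2, lon2) = sorted([login_a, login_b])
    hours = (t2 - t1).total_seconds() / 3600
    distance = haversine_km(lat1, lon1, lat2, lon2)
    if hours == 0:
        # Simultaneous sign-ins from two different places cannot both be local.
        return distance > 0
    return distance / hours > max_kmh


def confirmation_message(user: str) -> str:
    """Hypothetical text an agent might send the user before escalating."""
    return f"Hi {user}, we saw sign-ins to your account from two distant locations. Was this you? Reply yes or no."
```

If the user replies yes, the alert can be closed with that confirmation attached to the case; if not, it escalates with the distance and speed already computed.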

Again, like coding assistants, the UI/UX follows the user into remediation, letting you take multiple actions based on what might be most beneficial. Moving these actions into the browser proves helpful, as teams can respond more flexibly to the specific alert instead of relying on general summaries of what to do.
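One way to reconcile suggested remediation with keeping the analyst in control is an approval gate: low-risk actions an agent has been tuned to run autonomously execute immediately, and everything else waits for the analyst. The sketch below is a hypothetical policy; `Action`, `AUTONOMOUS_ALLOWLIST`, `plan_remediation`, and the action names are illustrative assumptions, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    target: str


# Hypothetical policy: low-risk actions an agent may run without asking.
AUTONOMOUS_ALLOWLIST = {"notify_user", "add_case_note"}


def plan_remediation(
    suggested: list[Action], approved_by_analyst: set[str]
) -> tuple[list[Action], list[Action]]:
    """Split suggested actions into those to run now and those awaiting approval."""
    run_now: list[Action] = []
    pending: list[Action] = []
    for action in suggested:
        if action.name in AUTONOMOUS_ALLOWLIST or action.name in approved_by_analyst:
            run_now.append(action)
        else:
            pending.append(action)
    return run_now, pending


suggestions = [
    Action("notify_user", "alice@example.com"),
    Action("disable_account", "alice@example.com"),
    Action("revoke_sessions", "alice@example.com"),
]
run_now, pending = plan_remediation(suggestions, approved_by_analyst={"revoke_sessions"})
```

Growing the allowlist over time, as confidence in a tuned agent increases, is one plausible path from analyst-approved actions toward the autonomous actions mentioned earlier.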

Conclusion

Overall, I’m excited to see what comes next for these more copilot-like AI SOC tools, which have learned from the SOAR + AI challenges the first wave faced. I look forward to sharing more insights about these tools once I have more hands-on experience with them, but what I’ve seen so far is very promising: they offer analysts increased speed and precision rather than seeking to replace them outright.