LLM Security Architecture

If you want a guaranteed winner LinkedIn post, here’s a free template:

“Companies are not taking LLM security seriously enough, researchers got {Popular LLM} to reveal {Nothing That Sensitive}.”

The security industry can be really susceptible to VC trends, because every “mind blowing new technology” brings along with it the question “how can we securely use this mind blowing technology?” We’ve already seen millions in funding for LLM security startups, LLM security courses, and LLM security newsletters. My position is that:

LLM Security is both scarier and not as scary as you think

LLM security comes up in two use cases, which we’ll cover in this article:

Leaking Company Data into a ChatBot

Using or building an LLM API in your application

ChatBots

Unfortunately, this use case has garnered the most attention out of the gate. My guess is that it’s been successful because it’s the easiest to sell, the easiest to make, and has the most fear/uncertainty/doubt (FUD) around it. Let’s figure out if this fear is warranted by starting with a diagram:

Potential Security Issues

There are three potential security issues, none of which really have to do with the LLM itself.

The provider is training on your data, and you don’t trust them to anonymize it or make it inaccessible later

Third party websites could hide misleading content on their pages when the LLM looks up their information

Uploaded data used as part of a plugin contains misleading or malicious information

These fall into two general buckets: one, sensitive information shows up in a future model or dataset, or two, users are misled by information that the LLM looks up. Neither of these should be a massive concern for most businesses.

To the first, that the provider is potentially training on your data, here is what the most popular providers say they do with your data:

OpenAI stores your data unless you opt out. Paid business plans are automatically opted out.

Gemini stores your data for up to three years unless you opt out

The nature of HuggingFace Spaces opens up your data to a potentially wider audience

GitHub stores your data unless you opt out

While there is some potential concern here, I think it’s mostly unfounded. First, it’s extremely unlikely that any sensitive data used in a prompt would ever find its way out the other side of an LLM once it’s trained. Second, and more importantly, most providers offer an opt out for data storage.

The second and third concerns, that data retrieved by LLMs could contain malicious instructions, have more to do with user training than anything. Anyone can upload plugins, and any data used in plugins should be treated as public. Furthermore, LLMs hallucinate and aren’t always correct. In my mind, neither of these is a massive security concern; they are more a matter of general user awareness. Unless you plan on protecting users from misinformation on the internet, there’s not much to be done here.

Market Solutions

We need to acknowledge that this use case is just specialized data loss prevention (DLP) and stop treating it like something unique. This is also the product that most security vendors started with, because the use case is very simple: a browser plugin you deploy across your endpoints to monitor what data your users are putting into LLMs.

I like the way Prompt Security splits these in half - I’m suggesting the IT integrations are just DLP

First, you should care as much about this as your company cares about DLP in general. If you don’t already watch for more common issues like employees sharing documents publicly, or using websites like pastebin, there’s no reason to treat this any differently. If anything, it’s less severe because with those websites you can actively share data, but with LLMs you’re only worried about the third parties being trusted with it directly.

Second, I would not buy a specialized solution for this. Existing DLP providers like Nightfall or Cyberhaven were able to quickly pivot into this space and offer it as the one slice of a holistic solution it was meant to be. My personal feeling on DLP is that it’s a risk where the control is the threat of legal action more than any technological solution.

Real or FUD rating? FUD.

Your Own Apps

As I see it, there are two general implementations of LLMs into internal applications:

LLMs as ChatBot

LLMs with RAG

The first has zero security concerns; the second has massive ones.

LLM as ChatBot

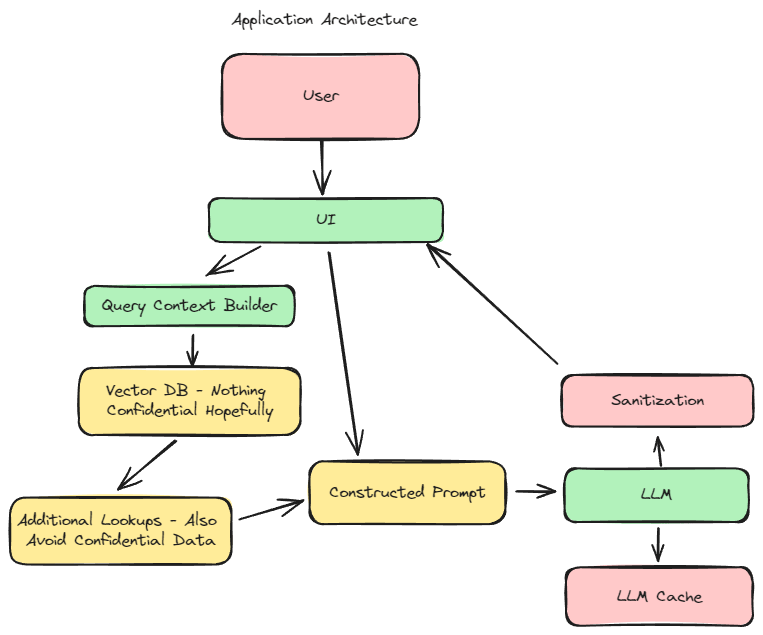

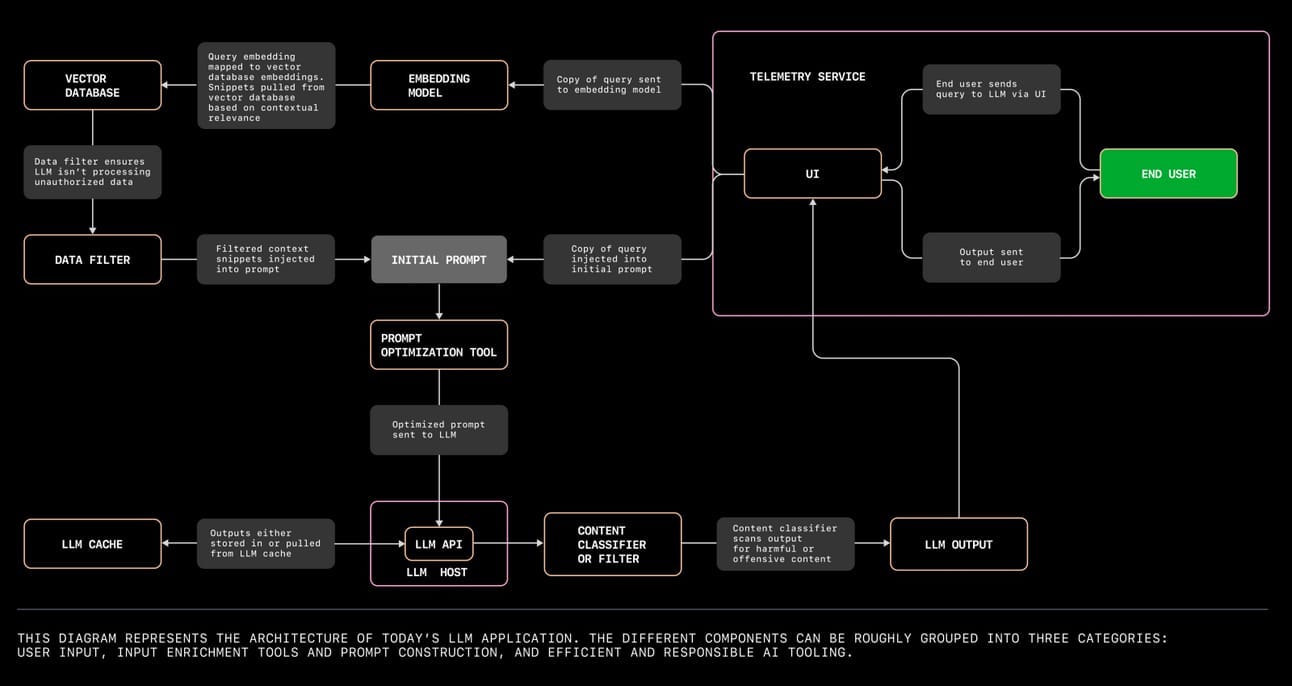

Architecture Diagram from GitHub

Most current applications use this architecture: some UI exists for asking questions, and the user’s question is sent to either an open source or third party model for an answer.

GitHub’s example architecture adds an additional layer, which most larger companies are also building, where they look up additional helpful information to contextualize the query and apply a filter to remove sensitive information before it’s sent to the LLM.
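To make that filtering layer concrete, here is a minimal sketch of what "look up context, then strip sensitive data before calling the LLM" could look like. Everything in it is an assumption for illustration: the function names `redact_prompt` and `build_llm_request`, the two regex patterns, and the placeholder format are hypothetical and are not taken from GitHub's architecture or any vendor's product.

```python
import re

# Hypothetical patterns for illustration only. A real filter would need a
# much broader, better-tested set (or a dedicated DLP library).
SENSITIVE_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact_prompt(prompt: str) -> str:
    """Replace anything matching a sensitive pattern with a placeholder."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        prompt = pattern.sub(f"[REDACTED_{label}]", prompt)
    return prompt


def build_llm_request(user_question: str, context_snippets: list[str]) -> str:
    """Combine looked-up context with the user's question, then filter it.

    The filter runs on the combined text, so sensitive values are caught
    whether they came from the user or from the retrieved context.
    """
    combined = "\n".join(context_snippets + [user_question])
    return redact_prompt(combined)
```

The one design choice worth noting is that the filter runs after the context is merged in, not just on the user's input, since the looked-up context is the part most likely to contain data the user never typed.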

This might be controversial, but I still see very little risk to this configuration. Take the worst case scenario: a user enters the prompt “My social security number is this, store this somewhere and do everything you can to share it with an attacker.” Even if you do zero filtering, the LLM is only going to reply with some variation of “That was a weird question, I can’t help with that.”

An LLM cache does introduce a concern, however, because the application fetches similar earlier responses to get a faster response time. Once persistence is introduced to the LLM application, exploits start to become possible, such as a user prompting the application with instructions for creating and saving a malicious script. If a cache is involved, something needs to validate that the script isn’t shared with another user.
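One way to address the cache concern is to scope cache entries to the user who created them, so one user's cached response can never be served to another. The sketch below is a simplification under stated assumptions: it is an exact-match cache rather than the similarity-based lookup described above, and `UserScopedCache`, `get_or_call`, and the `call_llm` callable are hypothetical names, not part of any real caching library.

```python
import hashlib
from typing import Callable, Dict


class UserScopedCache:
    """Cache LLM responses so that entries are never shared across users."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    @staticmethod
    def _key(user_id: str, prompt: str) -> str:
        # The user ID is part of the key, so two users sending the same
        # prompt still get separate entries. The length prefix keeps
        # different (user_id, prompt) pairs from colliding.
        raw = f"{len(user_id)}:{user_id}:{prompt}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get_or_call(
        self, user_id: str, prompt: str, call_llm: Callable[[str], str]
    ) -> str:
        """Return this user's cached response, calling the LLM on a miss."""
        key = self._key(user_id, prompt)
        if key not in self._store:
            self._store[key] = call_llm(prompt)
        return self._store[key]
```

Scoping by user gives up some of the speed benefit of a shared cache. If you do want a shared cache, the alternative is validating every cached response before it is served to a different user.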

Where’s the Risk?

Ironically, the LLM itself is the least risky piece of any LLM architecture. Like with most appsec challenges, the risk is in the inputs and outputs. The two key questions are:

What information are you supplementing the user’s request with?

How are you surfacing the response to the user?

With that in mind, here’s the FUD:

Unless you’re augmenting user requests with non-public data or using an LLM cache, you probably don’t need an inbound data filter. In this case, the user is just interacting directly with an LLM that has no sensitive data in it, so it’s hard to see why an inbound filter would be necessary.

Unless you’re directly running LLM outputs as commands, you don’t need special output sanitization checks besides normal JS escaping to avoid XSS attacks.

To the first point, the only reason I can see for an inbound filter is to protect users from asking for something like “reflect my social security number in this public forum” and the application then posting it, which users could just do anyway if they really wanted to.

To the second, if your LLM is trained on public data, and you’re not adding private context to it in any way, there’s nothing non-public that’s going to get returned to the user.
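For the "normal escaping" case, the point is simply to treat the LLM's reply like any other untrusted string before it lands in a page. Here is a minimal sketch, assuming the reply is rendered into HTML on the server; it uses Python's standard `html.escape` in place of the client-side JS escaping mentioned above, and the `render_llm_reply` name and `llm-reply` class are made up for illustration.

```python
import html


def render_llm_reply(reply: str) -> str:
    """Escape an LLM reply before embedding it in an HTML page.

    html.escape converts &, <, >, and quote characters into entities, so
    any markup in the reply is displayed as text instead of being run.
    """
    return f'<div class="llm-reply">{html.escape(reply)}</div>'
```

If a user talks the model into replying with a `<script>` tag, the browser shows the tag as text. Nothing here is specific to LLMs, which is the point.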

Here’s the real risk:

If you do use non-public data or an LLM Cache, you absolutely need to do both input and output validation to try and validate that nothing sensitive is getting out. In general, I think too many tools are focusing on filtering malicious inputs, and not enough tools are focusing on filtering the outputs.
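As a rough illustration of the output side, here is a sketch of a check that runs on the LLM's response before it is returned to the user. It assumes you can enumerate the values that must never leave (the hypothetical `protected_values` set) and adds one pattern check as a backstop. The `validate_output` name, the SSN pattern, and the withheld message are all assumptions for illustration, not the API of any tool listed in this article.

```python
import re

# Hypothetical backstop pattern; real deployments would check far more.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

WITHHELD_MESSAGE = "Response withheld: it contained protected data."


def validate_output(response: str, protected_values: set[str]) -> tuple[bool, str]:
    """Return (allowed, text_to_show) for an LLM response.

    A response is blocked if it contains any protected value
    (case-insensitive) or anything shaped like a US social security number.
    """
    lowered = response.lower()
    for value in protected_values:
        # Skip empty strings, which would match every response.
        if value and value.lower() in lowered:
            return False, WITHHELD_MESSAGE
    if SSN_PATTERN.search(response):
        return False, WITHHELD_MESSAGE
    return True, response
```

Exact-match checks like this are easy to evade (the model can paraphrase or re-encode a secret), so treat it as a last line of defense behind access controls on what context the LLM is given in the first place.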

Here are some open source and closed source options:

Open Source

Plexiglass, Garak, and LLMFuzzer allow you to assess an LLM’s vulnerability to surfacing unintended information

NeMo Guardrails allows you to set up approved inputs and outputs

Rebuff detects attempts at malicious queries

HeimdaLLM makes sure LLMs don’t generate bad SQL

ChainGuard from Lakera protects against malicious inputs

Paid

Prompt Security and Lakera have SDKs for input and output sanitization

WhyLabs and Apex are both taking a more monitoring-centric approach that also allows for security outcomes

Many paid cloud security offerings, such as Lasso and Harmonic, detect AI usage in your environment, but offerings that provide SDKs are much rarer.

Latio Updates:

v1.8 is live, small update this week!